Gary Grider, leader of the High Performance Computing Division at Los Alamos National Laboratory (LANL), and Bruce Tulloch, CEO of BitScope Designs, gave a joint press conference at SC17 last week to introduce the large Raspberry Pi cluster being built at LANL in New Mexico. This 3000 core cluster is being developed as a test bed for exascale systems research.

Gary explained that the most significant problem faced by the system developers at LANL as they build the next generation cluster computing solutions is one of scale. When designing new machines that go beyond the 20,000 nodes of HPC clusters like Trinity, one faces implementation challenges for which we don't yet have answers.

Gary said "The problem is, the system software people, the people who write operating systems, network stacks, launch, boot and monitor and tools, things like that, it's very difficult for them to get access to a machine that has that many nodes, it's even more difficult to become root on that machine for any period of time because those machines are purchased to do science not to do systems software development."

Gary remarked, "The poor systems software developer has to figure out how to test their gear, their wares, on machines that are big. We get them shots on machines but they're very short".

So about 6 or 7 years ago Gary came up with a program, managed jointly by Los Alamos, the National Science Foundation and the New Mexico Consortium, in which machines that were older and retired from science but which still had a lot of nodes would be used for systems software development and test.

Gary said "That worked for a while but the scales got to be so large that it wasn't really feasible to do 10,000 nodes or 20,000 nodes, the power to connect something like that is measured in the 8 to 10 megawatts or more range and the cooling, to cool that kind of stuff means lots and lots of water towers and all sorts of infrastructure, it's just not feasible to do".

Shown here is some of the power, air and water cooling infrastructure at Los Alamos National Laboratory that is required to support the operation of Trinity and their other large machines.

Gary continued, "so I've been trying to figure out how to build a very very large test bed and I finally stumbled on Raspberry Pi which is a very inexpensive, very low wattage, very well accepted computer and so I thought gosh if you could figure how to build a cluster out of those I might be able to afford a 10,000 node cluster because it's not very expensive, I certainly could afford the power because it's almost no power."

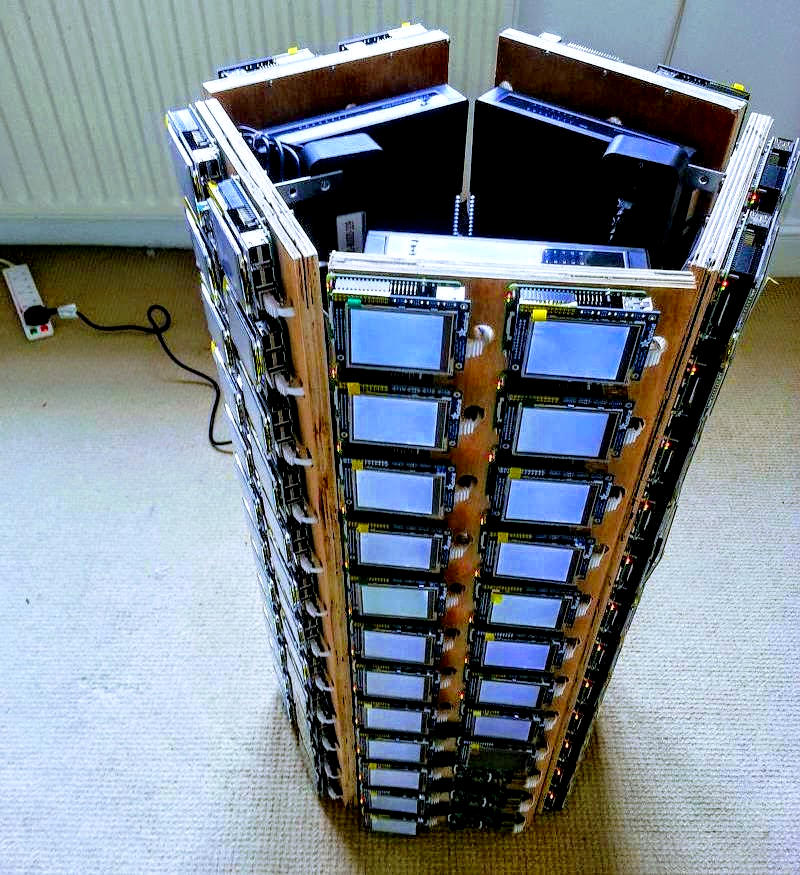

He went on "I looked around and I could not find any solutions that were packaged well, I saw a lot of people putting Raspberry Pis on cardboard and on plywood, building Christmas trees out of them and all kinds of things and that's cool and it's a really neat concept."

An example is the Beast, an interesting looking 120 node cluster of Raspberry Pi which was created by the team at resin.io but which is not well suited for LANL's HPC R&D purposes.

Gary continued "I really needed something more professionally done, something that I could package very densely so I could contemplate putting a 10,000 node cluster together in some very small amount of racks, maybe 8 or 10 racks or something, and with low power.

"I turned to SICORP, Jim Hetherington here is from SICORP, and asked them, okay is there anybody out there who could potentially make me a cluster, or a technology for clustering Raspberry Pi's together at pretty dense quantities."

Gary explained "Jim found BitScope, we have Bruce Tulloch here from BitScope, that actually designed and built a box to our needs. It's a 150 nodes per box and networks and fans and everything built into it, so in a standard rack unit you can put 150 nodes, so think a 1000, 1200 nodes in a rack, which is a lot of nodes in a rack, normally a 1200 node cluster would be 10 racks, instead it's one rack, normally it would need a lot of power and now it's a few kilowatts. It's very cost effective, it's very dense, it's very easy to power, it's very easy to build a big machine in this way, for this particular use, which is in system software development, system software management and scaling tests."

One of these 150 node Cluster Modules is shown here with Gary from LANL, Bruce and Norman from BitScope and Jim from SICORP just before the doors opened at SC17 last week. This module was hosted at the University of New Mexico Center for Advanced Research Computing Booth.

Gary introduced Bruce to talk about the design process for the cluster, BitScope's goals and how it may be used in other ways and in other markets beyond system development.

Bruce introduced BitScope Designs and explained the company's background in test, measurement and data acquisition. He said "we've done a lot of development of systems for computing in various ways but principally focused on data acquisition and in 2013 we noticed a rising star on the computing stage which was Raspberry Pi".

He went on to explain, "one of our key markets was, and always has been education, tertiary education, makers and those sort of things and we saw an opportunity for tools that engineers need to diagnose systems, mixed signal systems in particular, analog and digital systems, and we developed a mixed signal oscilloscope for the Raspberry Pi."

He said "We did a lot of systems software development on the Raspberry Pi platform which at that time was a very low powered computing platform. We formed the view that the Raspberry Pi had quite a lot of legs in it". He explained that, as of this year, Raspberry Pi has become the third best selling general purpose computing platform of all time, with sales of more than 14 million units.

Bruce then introduced BitScope Blade, the system used to create the Cluster Module.

Bruce said "One of the key issues when deploying small single board computers like the Raspberry Pi, particularly in industrial applications, is how to mount them and how to power them reliably".

Shown here is the cluster pack that was on display at the Department of Energy Booth at SC17. It's the sort used to build the Cluster Modules for LANL and comprises 15 Blade Quattro boards mounting 60 Raspberry Pi, all of which are powered via a single pair of 24V power wires connected to the pack.

Bruce noted that Blade made it possible to build the cluster modules quite easily. He said "in about three months we turned from concept to production five 150 node cluster modules for Gary's team".

Explaining the physical construction he said "the cluster modules we ended up producing are 6U high, about 36 inches deep, they have 144 active nodes, 6 spare nodes with one for cluster management and the whole thing is very self contained". The modules can be powered from 24V DC or 110 to 240V AC. He explained how the whole cluster can be bootstrapped from a single Micro SD card plugged into one of the nodes and how its power consumption and cooling requirements are vastly lower than those of HPC systems of similar scale.
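The figures quoted in the press conference can be cross-checked with a little arithmetic. The sketch below is only a back-of-the-envelope check, not anything presented by LANL or BitScope. The node counts, module height and number of modules come from the quotes above, while `CORES_PER_NODE = 4` (a quad-core Raspberry Pi) and `RACK_HEIGHT_U = 42` (a common full-height rack) are assumptions made for illustration.

```python
import math

# Figures taken from the press conference
NODES_PER_MODULE = 150   # nodes in one Cluster Module
ACTIVE_NODES = 144       # active nodes per module
SPARE_NODES = 6          # spare nodes per module
MODULE_HEIGHT_U = 6      # each module is 6U high
MODULES_DELIVERED = 5    # "five 150 node cluster modules"

# Assumptions for illustration only (not stated in the text)
CORES_PER_NODE = 4       # assumes a quad-core Raspberry Pi
RACK_HEIGHT_U = 42       # assumes a standard 42U rack


def modules_per_rack(rack_u=RACK_HEIGHT_U, module_u=MODULE_HEIGHT_U):
    """Whole modules that fit in one rack, ignoring switches and PDUs."""
    return rack_u // module_u


def nodes_per_rack(rack_u=RACK_HEIGHT_U):
    """Nodes in a rack filled entirely with Cluster Modules."""
    return modules_per_rack(rack_u) * NODES_PER_MODULE


def racks_needed(target_nodes, rack_u=RACK_HEIGHT_U):
    """Racks required to reach a target node count, rounded up."""
    return math.ceil(target_nodes / nodes_per_rack(rack_u))


total_nodes = MODULES_DELIVERED * NODES_PER_MODULE
total_cores = total_nodes * CORES_PER_NODE

if __name__ == "__main__":
    print(f"Nodes delivered:  {total_nodes}")             # 750
    print(f"Cores delivered:  {total_cores}")             # 3000
    print(f"Modules per rack: {modules_per_rack()}")      # 7
    print(f"Nodes per rack:   {nodes_per_rack()}")        # 1050
    print(f"Racks for 10,000: {racks_needed(10_000)}")    # 10
```

Under these assumptions the five modules give 750 nodes and 3000 cores, which matches the cluster size quoted at the start. A full rack holds about 1050 nodes, in line with Gary's "1000, 1200 nodes in a rack", and a 10,000 node system would need roughly 10 racks.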

Bruce said Raspberry Pi was a good choice to build these clusters, "The benefit of Raspberry Pi is not that it is a super powerful computer, it isn't, but it is a computer that has a big mindshare in the developer community, it runs open software stacks around Linux which are very popular in HPC and it has a very large number of developers sharing application software and researchers publishing papers".

While the system is well suited for R&D in large scale HPC system software design as Gary has identified, Bruce said BitScope has also been approached by many others looking to use it for small scale cloud and edge computing, threat management and industrial control and monitoring systems. He explained that the use of standard switches makes the system very flexible and allows it to be reconfigured. Bruce said "We have a lot of interest from Universities, for example people in tertiary level teaching ARM CPU programming, or in CPU and cluster architecture, even in high school designs where you've got cloud resident 'Pi in the Sky' as we like to call it".

The press conference ended with questions from the floor, which we'll post in a follow-up blog post.
